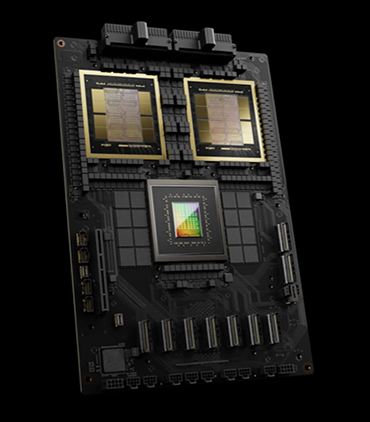

The second-generation Transformer Engine in the NVIDIA Blackwell architecture adds FP4 precision, enabling a major leap in inference acceleration. The NVIDIA HGX B200 achieves up to 15X faster real-time inference than the Hopper generation on the largest models, such as GPT-MoE-1.8T.

The faster second-generation Transformer Engine, which also features FP8 precision, enables the NVIDIA HGX B200 to train large language models up to 3X faster than the NVIDIA Hopper generation.

Blackwell’s new dedicated Decompression Engine supports the latest compression formats, such as LZ4, Snappy, and Deflate. With it, NVIDIA HGX B200 systems run query benchmarks up to 6X faster than CPUs and 2X faster than NVIDIA H100 Tensor Core GPUs.

The NVIDIA GB200 NVL72 introduces cutting-edge capabilities and a second-generation Transformer Engine that significantly accelerates LLM inference and training workloads, enabling real-time performance for resource-intensive applications like multi-trillion-parameter language models.

Paired with NVIDIA Quantum-X800 InfiniBand, Spectrum-X Ethernet, and BlueField-3 DPUs, GB200 delivers unprecedented levels of performance, efficiency, and security in massive-scale AI data centers.

TOPMOST was one of the first cloud platforms to bring NVIDIA HGX H100s online, and we’re equipped to be among the first NVIDIA Blackwell providers.

When you’re burdened with infrastructure overhead, you have less time and fewer resources to focus on building your products. TOPMOST’s fully managed cloud infrastructure frees you from these constraints and helps you get to market faster.

TOPMOST ensures your valuable compute resources are used only for value-adding activities like training, inference, and data processing. This means you get the best performance out of your resources.